The ‘Signal-to-Noise ratio’ (SNR) is a good indicator of how much detail (Signal) is captured relative to the amount of noise in an image.
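To make the idea concrete, here is a minimal sketch of one common way to estimate SNR: take a patch of the image that should be uniform (a clear sky or a gray card, say), treat the mean pixel value as the signal and the standard deviation as the noise, and express the ratio in decibels. The function name `snr_db` and the sample pixel values are made up for illustration, and real tools may measure SNR differently.

```python
import math
import statistics


def snr_db(patch):
    """Estimate SNR in decibels for a patch that should be uniform.

    Assumption for this sketch: signal = mean pixel value,
    noise = standard deviation of the pixel values.
    """
    values = list(patch)
    signal = statistics.fmean(values)
    noise = statistics.pstdev(values)
    if signal <= 0:
        raise ValueError("patch must have a positive mean intensity")
    if noise == 0:
        return math.inf  # perfectly flat patch: no measurable noise
    return 20 * math.log10(signal / noise)


# Hypothetical pixel values from the same gray patch at low and high ISO.
low_iso_patch = [100, 101, 99, 100, 100, 101, 99, 100]
high_iso_patch = [100, 120, 80, 110, 90, 115, 85, 100]

print(f"low ISO:  {snr_db(low_iso_patch):.1f} dB")
print(f"high ISO: {snr_db(high_iso_patch):.1f} dB")
```

Both patches have the same average brightness, but the high-ISO one varies far more from pixel to pixel, so its SNR comes out much lower.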


What is Noise?

Before we get to the meat of this post, it’s important to understand what noise is and how NR works. Noise is an artifact caused by digital sensors and is present in all digital images, no matter how low the ISO.

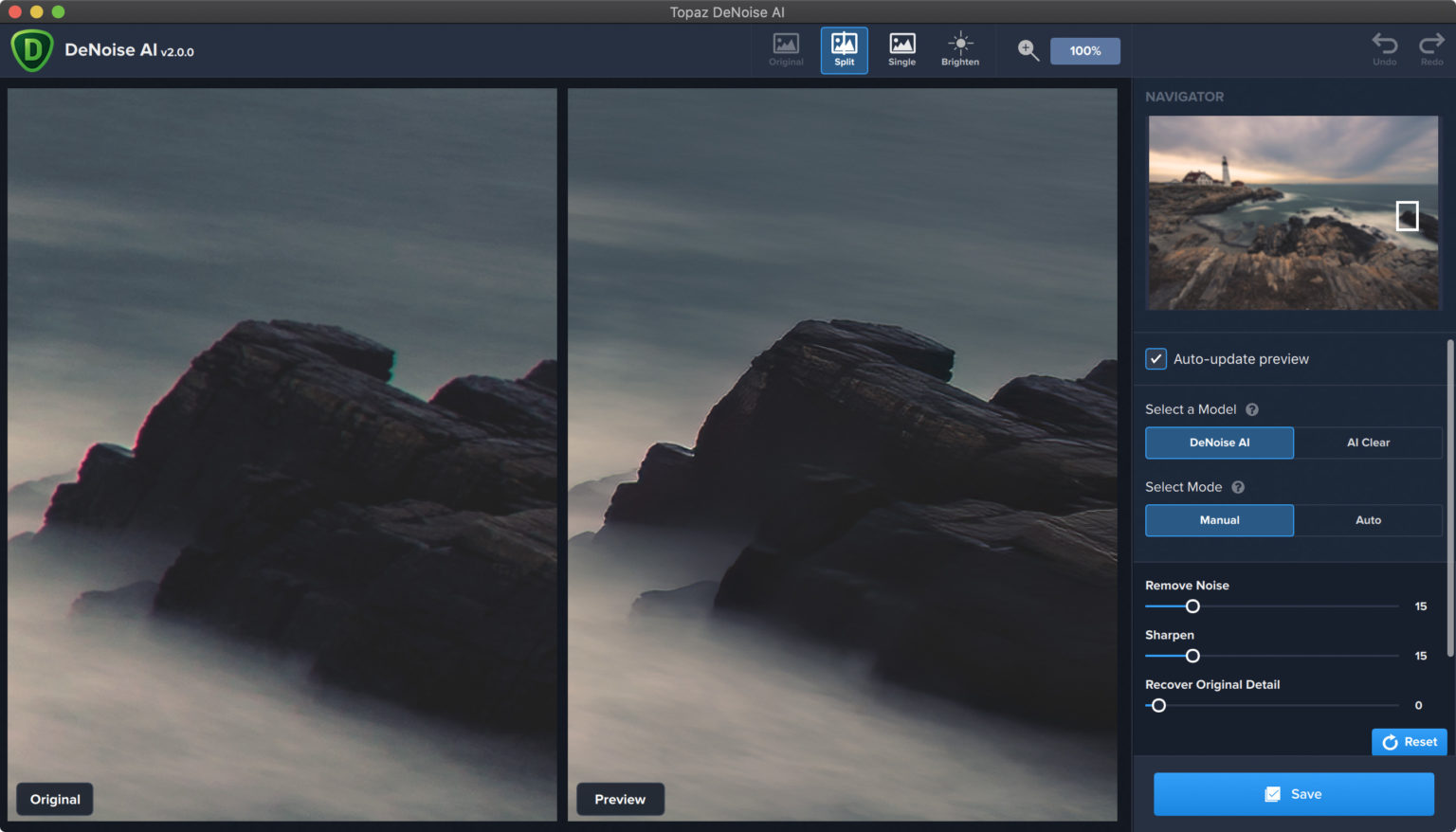


Noise Reduction (NR for short, since that term’s gonna come up a lot in this post) is an oft-misunderstood topic. It’s not as easy as it sounds, since proper NR involves careful manual adjustments based on the level of noise present in the image, not just dragging a slider all the way to the right (and no, that does not constitute manual labor lol).

Test conditions: no special preparation, 6 days uptime, lots of apps open behind Lightroom Classic. Lightroom Classic uses about 5.5GB of memory through most of AI Denoise processing, but that more than doubles to 12–13GB near the end. That still may have left large amounts of system memory available to the graphics/AI acceleration hardware. (Unified memory means the GPU integrated with the Apple Silicon SoC can use any amount of unused system memory.)


I think the Ian Lyons post that Victoria linked to may hold part of the answer to this. Hardware acceleration specifically for machine learning/AI might be a missing link that helps explain why some computers can process AI features so much faster than others, and why older computers take much more time.

On my mid-range but recent laptop, I have so far never seen a Denoise time longer than a minute. I just did some tests and below is what I got. My hardware list is at the end…really nothing special from a CPU/GPU point of view, so I strongly suspect it’s the Neural Engine machine learning hardware acceleration that cuts the times, and that machines with older AI acceleration hardware, or none at all, will have to take longer.

The Neural Engine is only in Apple Silicon Mac processors, which might help explain why owners of older Intel-based Macs are similarly reporting much longer AI Denoise times, in the minutes. On the Windows side, I wonder if times are consistently faster with an NVIDIA GPU new enough to have their more recent AI acceleration hardware. (I notice that D Fosse above only took 33 seconds using an RTX 3060 with 12GB VRAM. That GPU model was released the same year as my laptop.)

In my tests, while the GPU is very busy, the CPU is very quiet during AI Denoise, with other background processes using more CPU than Lightroom Classic. This looks very similar to video editing, where newer computers render video very quickly because they have both a powerful GPU and hardware acceleration for popular video codecs. An older computer lacks both, forcing rendering onto the CPU, which takes many times longer and costs a lot more power and heat.

Of course, this only looks at performance within Adobe AI Denoise alone. It does not explain why AI Denoise might be slower than Topaz or others on the same images. I expect Adobe will optimize performance further after this first release.

My hardware: MacBook Pro M1 Pro laptop (1.5 years old), 8 CPU cores and 14 GPU cores (yes, it’s the base model), with 32GB unified memory.
